Jun 4, 2025

Understanding HIPAA Compliance for AI in Healthcare

When implementing AI in healthcare settings, HIPAA compliance is a critical concern. The Health Insurance Portability and Accountability Act (HIPAA) establishes national standards to protect individuals' medical records and other personal health information. Here's what healthcare administrators need to understand about large language models (LLMs) and HIPAA:

LLMs and Protected Health Information (PHI)

LLMs like ChatGPT and Claude were trained on vast amounts of internet data. When you interact with these models through standard interfaces:

- Any PHI you share is typically sent to the vendor's servers for processing

- This data may be stored, logged, or even used to further train their models

- Without a Business Associate Agreement (BAA) and appropriate safeguards, sharing PHI this way may be an impermissible disclosure under HIPAA

Approaches to HIPAA-Compliant AI Implementation

Leading AI vendors now offer enterprise products designed to support secure, compliant use of AI with patient data. The main safeguards to look for are:

Business Associate Agreements (BAAs): Major vendors like OpenAI, Anthropic, and Microsoft now offer BAAs on certain enterprise and API offerings, contractually committing them to safeguard PHI as HIPAA requires of business associates. A BAA defines each party's responsibilities, but it typically covers only the specific services named in it, so confirm that the product you plan to use is in scope.

Encryption in transit and at rest: Enterprise AI platforms typically encrypt data both in transit and at rest, which protects PHI on the network and in storage. This is not end-to-end encryption in the strict sense, because the vendor must decrypt the data in order to process it.

Zero-retention policies: Some vendors offer zero-retention options for API traffic, under which your data is processed without being stored afterward or used for model training. These options may need to be requested or approved, so verify that the setting is actually active for your account before sending PHI.

Secure cloud environments: Leading providers deploy their enterprise AI solutions in cloud environments designed to support HIPAA compliance, with security controls such as access management, logging, and network isolation.

On-premises or private cloud deployment: For maximum control, some vendors offer deployment options that keep smaller, specialized LLMs entirely within your own secure infrastructure. While this approach requires more technical resources, it ensures no PHI ever leaves your control.

With these safeguards in place and a signed BAA covering the service, healthcare organizations can use AI solutions to handle PHI, provided they work with reputable vendors who understand healthcare's unique compliance requirements.

Risk Management Best Practices

To minimize risks when implementing AI solutions in healthcare:

- Conduct thorough vendor due diligence, focusing on their privacy practices and HIPAA expertise

- Implement strong access controls limiting which staff can use AI tools that might access PHI

- Create clear policies about what information can and cannot be shared with AI systems

- Maintain comprehensive audit trails of all AI system interactions involving patient data

- Regularly test and verify that your AI systems and processes maintain compliance
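To make the access-control, sharing-policy, and audit-trail practices above more concrete, here is a minimal Python sketch of a gate that could sit in front of an AI tool. Everything in it is an illustrative assumption rather than a recommended implementation: the role names, the `PHI_PATTERNS` table, and the `screen_prompt` function are hypothetical, and the regular expressions catch only a few obvious identifier formats. Real PHI detection needs much broader coverage and review by your compliance team.

```python
import hashlib
import re
from datetime import datetime, timezone

# Hypothetical patterns for a few obvious identifier formats.
# This is NOT a complete PHI detector.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}

# Hypothetical roles allowed to use the AI tool at all.
AUTHORIZED_ROLES = {"billing", "scheduling"}


def find_identifiers(text):
    """Return the sorted names of the patterns that match the text."""
    return sorted(
        name for name, pattern in PHI_PATTERNS.items() if pattern.search(text)
    )


def audit_record(user_id, role, text, allowed, reasons):
    """Build an audit entry that stores a hash of the prompt, not the prompt."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "role": role,
        "prompt_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        "allowed": allowed,
        "reasons": reasons,
    }


def screen_prompt(user_id, role, text, audit_log):
    """Apply the access and sharing policy, log the decision, return True if allowed."""
    reasons = []
    if role not in AUTHORIZED_ROLES:
        reasons.append("role_not_authorized")
    reasons.extend(f"contains_{name}" for name in find_identifiers(text))
    allowed = not reasons
    audit_log.append(audit_record(user_id, role, text, allowed, reasons))
    return allowed
```

A caller would invoke `screen_prompt(user_id, role, text, audit_log)` before forwarding anything to the vendor and send the prompt only when it returns `True`. The audit entry keeps a SHA-256 hash instead of the prompt text so that the log itself does not become another store of PHI. That is one possible design choice; some organizations may instead need to retain full prompts in a secured system.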

Remember that HIPAA compliance is ultimately your organization's responsibility, even when working with vendors who claim to be "HIPAA compliant." Always consult with legal experts specializing in healthcare compliance when implementing any new AI technology.

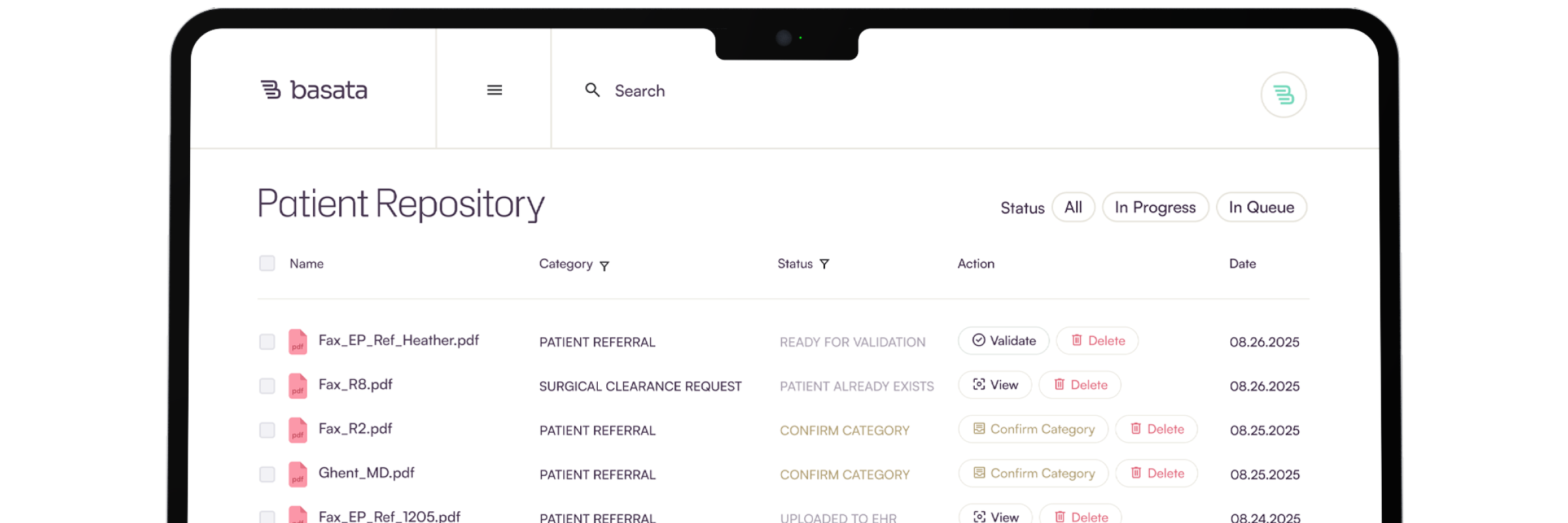
