Knowledge Drops

Jun 1, 2025

Guide to AI for Medical Practice Administration Leaders

The healthcare industry is experiencing a technological revolution, and artificial intelligence sits at its center. If you're feeling overwhelmed by the constant buzz around AI, or skeptical about whether it lives up to the hype, you're not alone. Many healthcare leaders find themselves caught between the promise of transformative technology and the reality of running a busy medical practice with real patients, real deadlines, and real budget constraints.

As leaders in the healthcare industry, you've likely heard countless mentions of "AI" or "artificial intelligence" in recent years. From news headlines to vendor pitches, AI seems to be everywhere. But what exactly is it, and how can it genuinely benefit the administration of your medical practice?

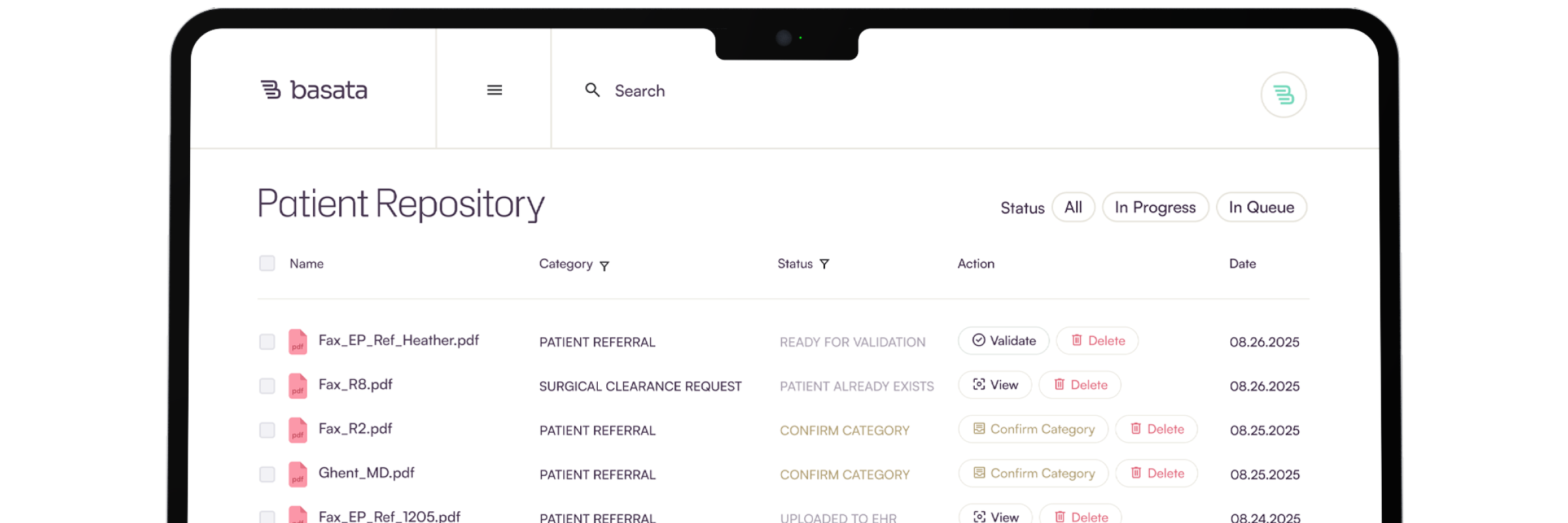

Picture this: your practice finally conquering that never-ending administrative backlog. No more perpetually understaffed departments struggling to keep up. The call center, front office, and back office are all winning at their jobs every day. Ballooning administrative costs start shrinking. And perhaps most importantly, your team focuses on what truly matters: patient care. AI automation, whether full or partial, gives your team admin superpowers.

But here's the challenge: the market is flooded with vendors making big promises and using technical jargon designed to impress rather than inform. We've created this guide to demystify AI concepts and help you make informed decisions about working with AI vendors and integrating this technology into your practice operations.

What Is Artificial Intelligence?

At its core, artificial intelligence refers to computer systems designed to perform tasks that typically require human intelligence. Rather than following explicitly programmed instructions for every possible scenario, modern AI systems can learn from examples and adapt to new inputs and situations.

Despite science fiction portrayals, AI is not a robot or a sentient being like Data from Star Trek. Under the hood, a modern AI model is a very sophisticated mathematical equation, often with billions of coefficients (known as parameters).

What makes AI so remarkable is that this math equation can produce language and behaviors that appear incredibly human-like. AI can generate text, audio, images, and video content that seems thoughtful, reasoned, and realistic, yet it has no actual understanding, consciousness, or sentience.

When an AI system appears to "think" or "understand," it is actually calculating probabilities. The model computes which outputs are statistically most likely to follow the given inputs, based on patterns extracted from its training data. Seeing that mathematics alone can create such seemingly intelligent outputs makes AI both mind-blowing and unsettling.
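To make "calculating probabilities" concrete, here is a minimal Python sketch of the final step many language models use, a function called softmax, which turns raw scores into probabilities. The three candidate words and their scores are invented for illustration; a real model scores tens of thousands of candidates using its learned coefficients.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())
    # Subtracting the highest score keeps the exponentials numerically stable.
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for the word that follows "The patient was ..."
scores = {"discharged": 2.0, "admitted": 1.5, "purple": -3.0}

probs = softmax(scores)
most_likely = max(probs, key=probs.get)  # the word with the highest probability
print(most_likely, round(probs[most_likely], 2))
```

The takeaway is that "purple" is never ruled out by understanding. It simply receives a very low probability.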

What’s different about Generative AI?

To grasp the new world of generative AI, let’s understand how it compares to traditional machine learning.

Traditional Machine Learning

Think of traditional machine learning as a specialist, highly trained to do one specific job really well:

- Each model has one job, like predicting which patients might be readmitted or spotting unusual billing patterns

- The model has to be trained for its job with large volumes of high-quality training data

- The outputs are typically predictions, classifications, or recommendations

For example, you might use a traditional machine learning model to analyze patient demographics, diagnoses, and treatment histories to flag high-risk patients for follow-up. This model would be great at this specific task—but ask it to handle insurance verification or process documents, and it would be completely lost. You'd need entirely different models for those tasks, each requiring its own development and training.
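As a rough illustration of how narrow such a model is, the sketch below scores readmission risk from three inputs and nothing else. The feature names, weights, and threshold are hypothetical placeholders, not clinical values; a real model would learn its weights from your historical data.

```python
import math

# Hypothetical weights, invented for illustration only.
WEIGHTS = {"age_over_65": 0.8, "prior_admissions": 0.6, "chronic_conditions": 0.5}
BIAS = -2.5

def readmission_risk(patient):
    """Return a risk score between 0 and 1 using a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * patient[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))

def flag_for_follow_up(patient, threshold=0.5):
    """Flag the patient when the risk score reaches the threshold."""
    return readmission_risk(patient) >= threshold

low_risk = {"age_over_65": 0, "prior_admissions": 0, "chronic_conditions": 1}
high_risk = {"age_over_65": 1, "prior_admissions": 3, "chronic_conditions": 2}

print(flag_for_follow_up(low_risk), flag_for_follow_up(high_risk))
```

Notice that the function only accepts these three numbers. It has no way to read an insurance card or a referral letter, which is why each new task needs its own model.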

Generative AI and Large Language Models

Generative AI—especially Large Language Models (LLMs)—is more like that incredibly versatile team member who can handle almost anything you throw their way:

- These systems train on massive amounts of text from across the internet and beyond

- They can tackle a wide variety of language tasks including summarization, creative writing, data extraction from text, and much more

- They absorb language patterns during initial training, then get fine-tuned to be even more helpful

ChatGPT is probably the most famous example, and chances are you've already played with it. Think about the range of tasks it handles effortlessly. In a single coffee break, you might use it to draft an email to your insurance partners, explain a complex diagnosis in patient-friendly language, and brainstorm content for your newsletter. The same tool can summarize a dense research paper or help you think through a tricky staff scheduling problem. That versatility is what makes this technology so game-changing. Instead of a dozen different specialized tools, one well-trained system can handle all these tasks with impressive skill.

AI 101: Fundamentals

What is an LLM?

LLM stands for "Large Language Model," a sophisticated computer program that excels at processing and producing human language. Think of it like having an assistant who has read millions of books, articles, and websites, with an incredible memory for patterns in how people write and speak. When you ask this assistant a question, they can respond naturally and helpfully because they've absorbed countless examples of human communication.

Instead of a person doing all that reading, an LLM is a computer program trained on massive amounts of text from the internet, learning patterns about which words go together, how to structure sentences, and how to answer different questions. When you type something to an LLM, it uses these learned patterns to predict what words should come next, generating responses that sound human-like without actually thinking the way humans do. The "large" aspect refers to the enormous datasets and computing power involved, which enables these models to excel at conversations, answering questions, writing assistance, and many other language-based tasks.

How LLMs Work: From Training to Usage

LLMs function through a deceptively simple core mechanism: predicting what word should come next in a sequence based on the context of previous words. Let's break this down into how these models are built and then how we actually use them:

How LLMs Are Trained

Creating these powerful models involves several distinct phases:

- 🔄 Pre-training: The model is exposed to billions of text examples from books, websites, articles, and other sources, learning statistical patterns in language. This is like the model reading a significant portion of the internet to understand how language works.

- ⚙️ Fine-tuning: A process where the model is further trained on specific datasets to enhance its capabilities for particular tasks or domains. This specialized training helps the model generate more relevant, accurate responses for specific applications.

- 🔍 RLHF (Reinforcement Learning from Human Feedback): A technique where human evaluators rate the model's outputs, creating a feedback loop that helps align the model with human preferences and values. RLHF teaches models which responses are helpful versus harmful or inaccurate.

What Happens When You Use an LLM

When you interact with an AI system like ChatGPT, here's what's actually happening: The system takes everything in your conversation—your questions, its previous responses, any context you've provided—and uses this complete history to predict what text should come next. It generates one word, adds that word to the conversation history, then uses this updated context to predict the next word, and so on. Each new word becomes part of the context that influences all subsequent words. This chain reaction continues until the response is complete. AI experts call this process "inference"—you'll hear this term when vendors describe how their systems operate in real-time.
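The word-by-word loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions: the probability table is invented, and it looks only at the most recent word, whereas a real LLM considers the entire conversation and always picks from a far larger vocabulary.

```python
# Toy "model": for each word, hypothetical probabilities for the next word.
NEXT_WORD_PROBS = {
    "your": {"appointment": 0.7, "claim": 0.3},
    "appointment": {"is": 0.9, "was": 0.1},
    "is": {"confirmed": 0.8, "pending": 0.2},
    "confirmed": {"<end>": 1.0},
}

def generate(prompt, max_words=10):
    """Repeatedly predict the next word and append it to the context."""
    context = prompt.split()
    if not context:
        return ""
    for _ in range(max_words):
        options = NEXT_WORD_PROBS.get(context[-1])
        if not options:
            break  # the toy model has no prediction for this word
        next_word = max(options, key=options.get)  # pick the most likely word
        if next_word == "<end>":
            break  # the model signals that the response is complete
        context.append(next_word)  # the new word becomes part of the context
    return " ".join(context)

print(generate("your"))
```

Each pass through the loop is one prediction, which is why longer responses take longer to produce and typically cost more to run.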

Limitations of LLMs: Understanding Hallucinations

LLMs can perform an impressive range of tasks without task-specific programming.

However, one significant limitation of LLMs is their tendency to "hallucinate" — generating content that sounds plausible but is factually incorrect or entirely fabricated.

Why do hallucinations occur? Remember that these models fundamentally work by predicting what text should come next based on patterns they've observed, not by accessing a database of verified facts. When an LLM lacks information or encounters ambiguity, it may generate a plausible-sounding but incorrect response rather than acknowledging uncertainty.

In healthcare settings, this presents obvious risks. An AI system that confidently provides incorrect information about medical procedures, billing codes, or patient data could create significant problems. This is why responsible AI implementation in healthcare requires measures to mitigate hallucinations. These could include human oversight ("human-in-the-loop"), integration with verified information sources, or clear processes for validating AI outputs, to name just a few examples.
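Here is one way those safeguards might fit together, sketched in Python. Everything in it is an assumption made for illustration: the placeholder codes, the confidence score (which your vendor's system may or may not provide), and the 0.9 cutoff.

```python
# Hypothetical verified list; a real practice would load this from a
# trusted, maintained source rather than hard-coding it.
VERIFIED_CODES = {"CODE-001", "CODE-002", "CODE-003"}

def review_ai_suggestion(suggested_code, confidence, min_confidence=0.9):
    """Decide what to do with a billing code suggested by an AI system."""
    if suggested_code not in VERIFIED_CODES:
        return "reject"        # possible hallucination: code is not on the verified list
    if confidence < min_confidence:
        return "human_review"  # valid code, but a staff member should double-check
    return "accept"

print(review_ai_suggestion("CODE-999", 0.99))  # not on the verified list
print(review_ai_suggestion("CODE-002", 0.60))  # real code, low confidence
print(review_ai_suggestion("CODE-002", 0.95))  # real code, high confidence
```

The design choice worth noting is the order of the checks: the verified-source check runs first, so even a very confident answer is rejected when the code does not exist.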

Understanding these limitations is essential for implementing AI responsibly in healthcare administrative settings.

Curious about Basata? Learn how you can give your admin team superpowers.
